You may have heard it before…“A new study proves…[insert absolute/extreme statement here],” or “There’s no evidence to support that claim.” These kinds of statements are often used to validate our own ideas, perceptions, and opinions, or to put down the ideas of others. They are common not only on social media and in everyday conversations with friends and family, but also in the world of conservative therapy, rehabilitation, and strength and conditioning. This line of thinking gets us into trouble when we try to equate science with Truth. Science is the study of the physical world through repeated observation and experiment. Truth can be defined as a reality, fact, or belief. Truths vary between individuals because they depend upon each individual’s unique perception. Therefore, we need to separate this search for Truth from the scientific domain and instead use science and research as a resource, rather than as a black-or-white absolute.

How we choose to interpret research findings can have real-world implications, especially if you’re a health care provider giving advice to an athlete/client/patient. If you don’t have degrees in epidemiology, research methodology, and statistics (most health practitioners, myself included, do not!), evaluating and understanding research can be a daunting task. Furthermore, your ability to confidently make decisions based on the research you read may be compromised. Finally, if you are unaware of the limitations of how you interpret research, you may inadvertently cause harm to those to whom you are giving advice.

In our paradigm, we strive to use research as a foundation for the concepts we teach in our courses, while minimizing the effects of our own, albeit sometimes unavoidable, biases. The following ideas describe our perspective and how we read, interpret, and use research. They are a good basis (in my opinion!) for becoming an RRR – Reasonable Research Regurgitator! An RRR is not a professional researcher, but rather a person who attempts to use (regurgitate) other people’s research to further their understanding of a particular topic, without overstating the results of the study. Furthermore, an RRR understands that one of the most important considerations when trying to apply any study to the real world is context!

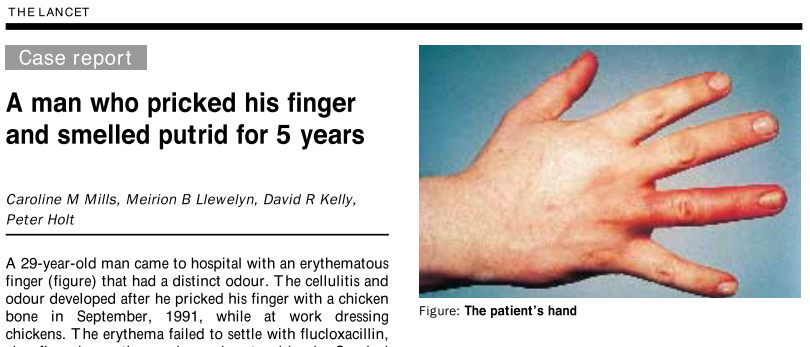

The randomized controlled trial (RCT), often considered the research gold standard, is our most reasonable method for suggesting causation (1). However, this form of research may not always be warranted and/or possible to conduct. For example, a systematic review of RCTs looked at the effect of parachutes to prevent death related to gravitational challenge (2). The search methods included human studies comparing participants who use parachutes to those who do not use parachutes when jumping from greater than 100 meters. Hopefully by now you realize that such an RCT does not, and cannot, exist! The widely accepted notion that parachutes prevent death is based purely on observational data. To say that we should not accept parachutes as an intervention due to the absence of a supporting RCT would be ludicrous! While this is an extreme (and satirical) example, the scenario is similar for conservative treatments (e.g. manual therapy) that can’t provide ideal control methods due to ethical or technological limitations (more on the limitations of manual therapy research in a future post).

On the other end of the research spectrum, near the bottom of the research pyramid, is the case report. Most would agree that making a clinical decision based solely on a case report would not constitute reasonable, evidence-informed practice. However, there can be a time and place where the case report is of value…just ask the next person to have a putrid-smelling finger for five years with no known cure (https://www.thelancet.com/pdfs/journals/lancet/PIIS0140-6736(96)06408-2.pdf) (3).

Even when a study uses appropriate research methods, other considerations include internal/external validity, various forms of bias, and choice of statistical methods, to name just a few. Unfortunately, reading abstracts doesn’t make you an evidence-based practitioner!

How do I use research in reality?

For the average busy practitioner, it is most valuable to look at research in the context of the athlete/patient in front of you. The better matched your athlete is to the research question and patient population of the study, the more confident you can be in applying the results (e.g. don’t look at a study on knee pain in 65-year-old males and try to directly extrapolate this to your 16-year-old female basketball player!). Nonetheless, it is unlikely that a single paper will guide your clinical practice. Most literature you read will continuously expand and build your unique treatment/training philosophy, so take each new piece of information and think about how it expands or alters your current practice methods and line of thinking.

In the AMA seminars, we look at “the evidence” as a constantly moving target and we use it to regularly inform, modify, and update our theoretical and practical material. Kinematics/kinetics, biomechanics, movement science, strength and conditioning, and motor learning are rapidly growing fields, and as such, continuous monitoring of the evidence is essential to staying current.

As self-proclaimed Reasonable Research Regurgitators, we are continuously working to better understand how to interpret research so that it can help inform our practice and teaching methods. Through AMA, we hope to challenge common ideas and think critically about the things we think we know, in the context of athletic movement. Please feel free to connect with us on any relevant topic or, better yet, come join the conversation in person at one of our seminars!

Patrick Welsh, Co-Director of AMA

1. Farrell B. Efficient management of randomised controlled trials: nature or nurture. BMJ. 1998;317(7167):1236-1239.

2. Smith GC, Pell JP. Parachute use to prevent death and major trauma related to gravitational challenge: systematic review of [randomized] controlled trials. BMJ. 2003;327:1459-1461.

3. Mills CM, Llewelyn MB, Kelly DR, Holt P. A man who pricked his finger and smelled putrid for 5 years. Lancet. 1996;348:1996.